2025-12-11 13:58:08

1. Benchmark Execution Summary

1.1. Session

- Hostname: gaya
- User: gaya
- Time Start: 20251211T135811+0100
- Time End: 20251212T002724+0100
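The session timestamps use the compact ISO 8601 "basic" form (date, `T`, time, UTC offset). A minimal sketch, assuming Python's standard `datetime` module, that turns the start and end stamps into an elapsed wall-clock time. The helper name `session_duration_seconds` is hypothetical and not part of feelpp.benchmarking.

```python
from datetime import datetime

# Compact ISO 8601 "basic" form used in the session block: YYYYMMDDTHHMMSS+HHMM
TIMESTAMP_FORMAT = "%Y%m%dT%H%M%S%z"


def session_duration_seconds(start: str, end: str) -> float:
    """Return the elapsed wall-clock time, in seconds, between two session timestamps."""
    t0 = datetime.strptime(start, TIMESTAMP_FORMAT)
    t1 = datetime.strptime(end, TIMESTAMP_FORMAT)
    # Both datetimes are timezone-aware, so the subtraction accounts for the UTC offsets.
    return (t1 - t0).total_seconds()


elapsed = session_duration_seconds("20251211T135811+0100", "20251212T002724+0100")
print(f"{elapsed:.0f} s ({elapsed / 3600:.2f} h)")
```

For the session above this gives 37753 s, i.e. roughly 10.5 h.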

1.3. Parametrization

| Status | Hash | Tasks | Discretization | Total Time (s) |
|---|---|---|---|---|
| 🟢 | 0c0d9679 | 128 | P1 | 2630.907 |
| 🟢 | 1aad25f9 | 8 | P1 | 1058.260 |
| 🟢 | 1c58710c | 128 | P2 | 2844.602 |
| 🟢 | 2bee4b08 | 64 | P1 | 2353.527 |
| 🟢 | 54cc6124 | 32 | P2 | 2305.390 |
| 🟢 | 6016d433 | 256 | P1 | 37474.588 |
| 🟢 | 71659d70 | 32 | P1 | 1989.690 |
| 🟢 | 77eb0048 | 16 | P1 | 1457.834 |
| 🟢 | 888f7b72 | 64 | P2 | 2567.229 |
| 🟢 | a285793a | 16 | P2 | 1907.830 |
| 🟢 | ac8f4cc2 | 4 | P1 | 320.882 |
| 🔴 | bbf416a6 | 4 | P2 | 870.761 |
| 🔴 | f4c5efcd | 8 | P2 | 1373.874 |
| 🟢 | fb46b4da | 256 | P2 | 37751.945 |
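The parametrization table is ordered by hash, which makes trends across task counts hard to read. A minimal sketch that regroups the rows by discretization and task count and lists the flagged runs. It assumes that 🔴 marks a failed or incomplete run; the report does not define the status markers. "Total Time (s)" is used exactly as reported, and the function names are hypothetical.

```python
from collections import defaultdict

# Rows transcribed from the parametrization table:
# (status, hash, tasks, discretization, total time in seconds)
RUNS = [
    ("🟢", "0c0d9679", 128, "P1", 2630.907),
    ("🟢", "1aad25f9", 8, "P1", 1058.260),
    ("🟢", "1c58710c", 128, "P2", 2844.602),
    ("🟢", "2bee4b08", 64, "P1", 2353.527),
    ("🟢", "54cc6124", 32, "P2", 2305.390),
    ("🟢", "6016d433", 256, "P1", 37474.588),
    ("🟢", "71659d70", 32, "P1", 1989.690),
    ("🟢", "77eb0048", 16, "P1", 1457.834),
    ("🟢", "888f7b72", 64, "P2", 2567.229),
    ("🟢", "a285793a", 16, "P2", 1907.830),
    ("🟢", "ac8f4cc2", 4, "P1", 320.882),
    ("🔴", "bbf416a6", 4, "P2", 870.761),
    ("🔴", "f4c5efcd", 8, "P2", 1373.874),
    ("🟢", "fb46b4da", 256, "P2", 37751.945),
]


def failed_runs(runs):
    """Return sorted (tasks, discretization) pairs for runs flagged with the red marker."""
    return sorted((tasks, disc) for status, _, tasks, disc, _ in runs if status == "🔴")


def times_by_discretization(runs):
    """Map each discretization to its (tasks, total time) rows, sorted by task count."""
    table = defaultdict(list)
    for _, _, tasks, disc, total in runs:
        table[disc].append((tasks, total))
    return {disc: sorted(rows) for disc, rows in table.items()}


print("Flagged runs:", failed_runs(RUNS))
for disc, rows in sorted(times_by_discretization(RUNS).items()):
    for tasks, total in rows:
        print(f"{disc} {tasks:>4} tasks: {total:10.3f} s")
```

Sorting by task count within each discretization puts the P1 and P2 runs with the same number of tasks side by side.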

2. Benchmark: Thermo-Electric Coupling

2.1. Description

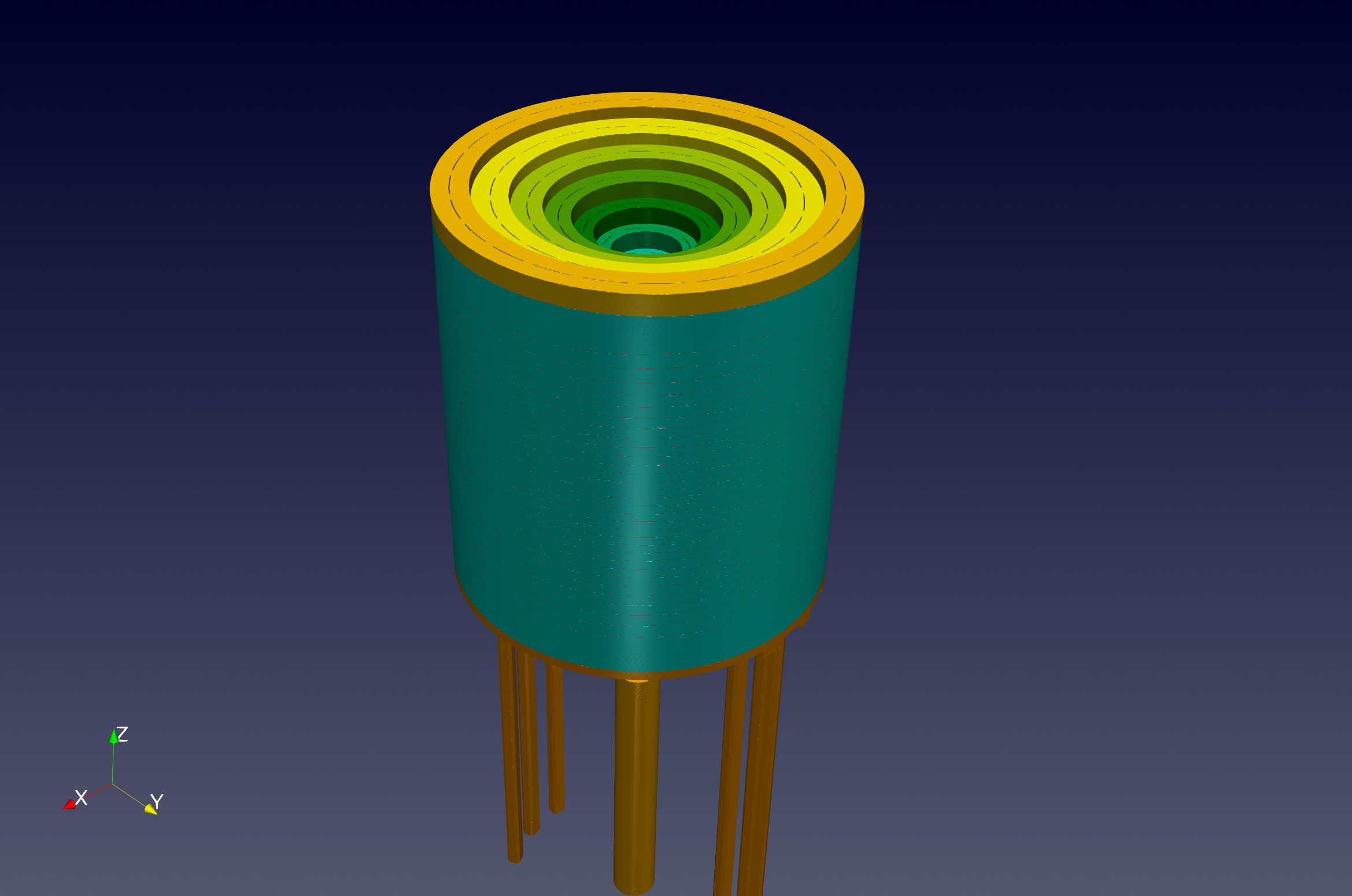

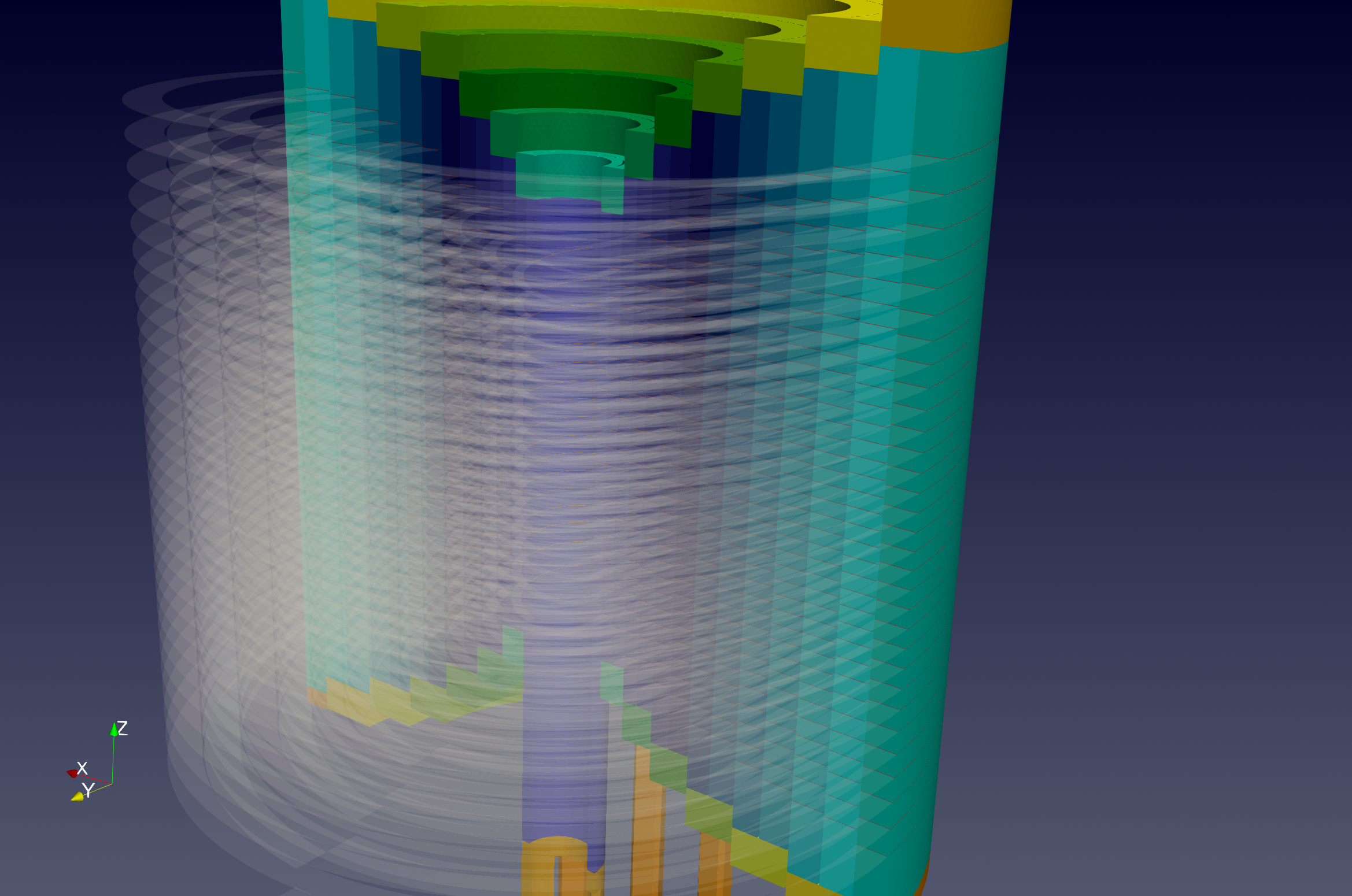

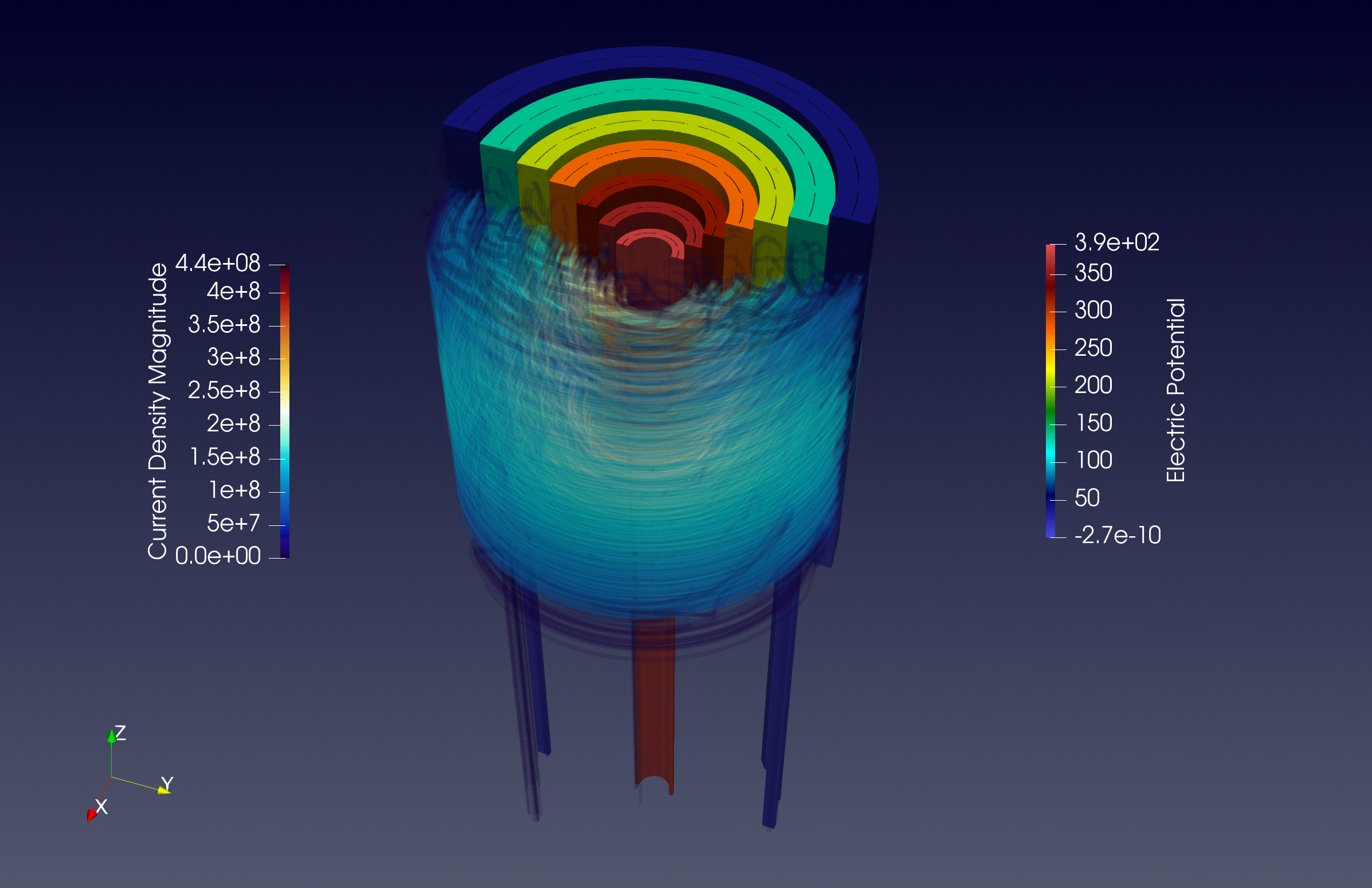

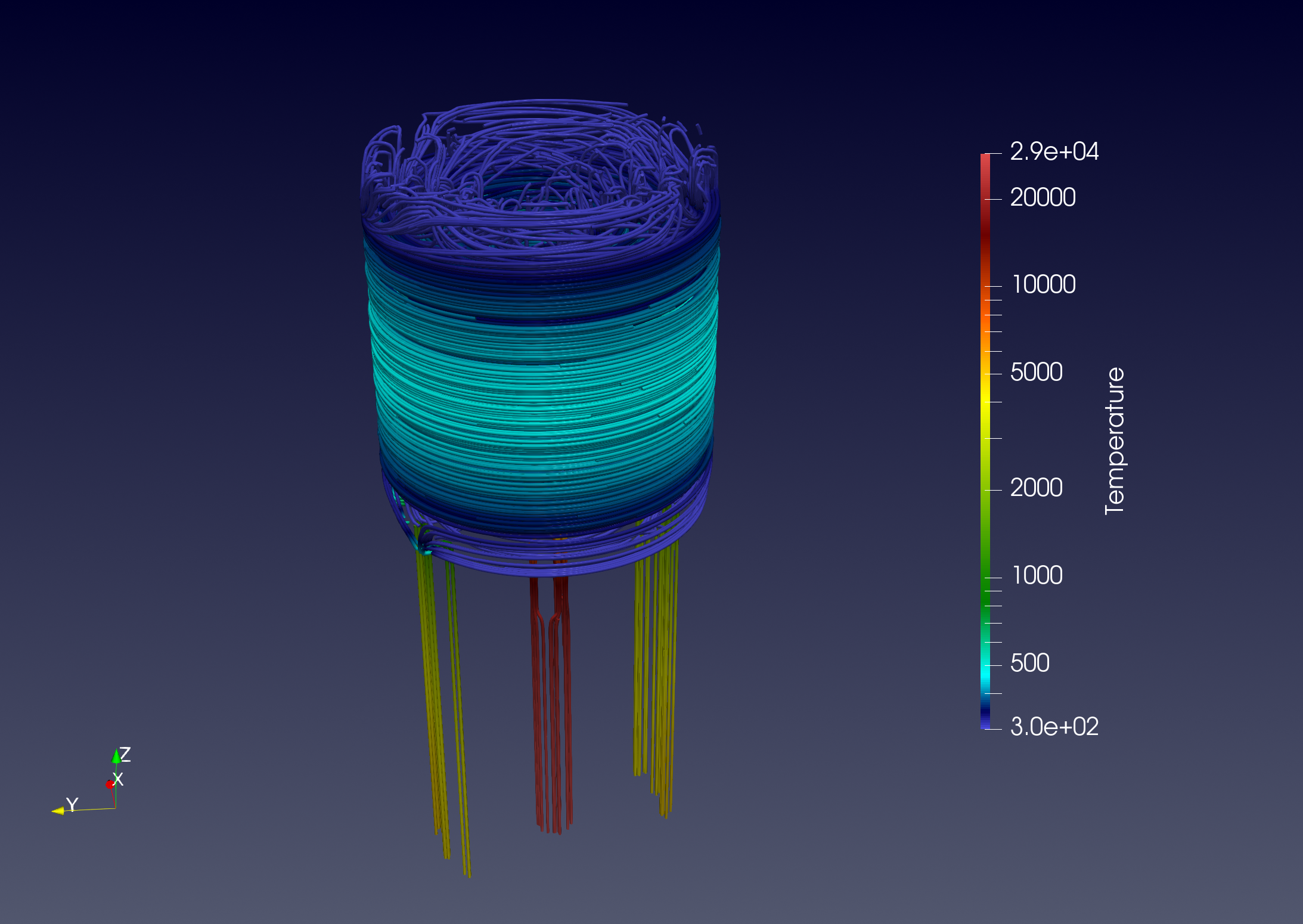

This benchmark models the temperature field and the electric current distribution in a high-field resistive magnet of the Laboratoire National des Champs Magnétiques Intenses. The magnet consists of a set of 14 cylindrical copper-alloy tubes connected in series, two by two, by rings. In each tube, the current path is defined by two helical cuts of 0.2 mm width. The rings are machined to let water flow between consecutive tubes through a 0.8 mm channel. The magnet is operated at 12 MW with an imposed total current of 31 kA, and the water flow rate in the magnet is about 140 l/s. Water cooling is modeled with Robin boundary conditions whose parameters are derived from classical thermo-hydraulic correlations. A more detailed version of the full model is available in (Daversin Catty, 2016; Hild, 2020). The model is run with the Feel++ thermoelectric toolbox. The geometry used in this performance benchmark is illustrated in Figure 4.13. It is a complex domain composed of a large number of components, some of which are very thin.
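To make the description concrete, here is a generic steady-state thermoelectric (Joule heating) model of the kind described above, with a Robin condition for the water cooling. This is an assumed textbook form, not necessarily the exact formulation implemented in the Feel++ thermoelectric toolbox. The symbols are notation introduced here: $V$ is the electric potential, $T$ the temperature, $\sigma$ the electrical conductivity, $k$ the thermal conductivity, $h$ the heat transfer coefficient and $T_w$ the water temperature. In the report, $h$ and $T_w$ are the parameters obtained from the thermo-hydraulic correlations.

```latex
% Assumed generic steady-state thermoelectric (Joule heating) model
\begin{aligned}
  -\nabla\cdot\left(\sigma(T)\,\nabla V\right) &= 0,
  \qquad \mathbf{j} = -\sigma(T)\,\nabla V, \\
  -\nabla\cdot\left(k(T)\,\nabla T\right) &= \sigma(T)\,\lvert\nabla V\rvert^{2}, \\
  -k(T)\,\nabla T\cdot\mathbf{n} &= h\,\left(T - T_w\right)
  \quad \text{on the water-cooled surfaces.}
\end{aligned}
```

With the operating point quoted above, simple arithmetic gives an overall voltage $U = P/I = 12\,\text{MW} / 31\,\text{kA} \approx 387\,\text{V}$ and an equivalent resistance $R = P/I^2 \approx 12.5\,\text{m}\Omega$. Assuming that the full 12 MW is carried away by the 140 l/s of water, with assumed properties $\rho \approx 1000\,\text{kg/m}^3$ and $c_p \approx 4180\,\text{J/(kg K)}$, the mean water temperature rise is about $\Delta T \approx P/(\rho c_p Q) \approx 20.5\,\text{K}$.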

2.2. Benchmarking Tools Used

This benchmark was run using feelpp.benchmarking, version 4.0.0.

The benchmark was performed on the Gaya supercomputer (see Section 10.1). Execution times were measured with the performance tools integrated into the Feel++ toolboxes framework. Note that several Feel++ installations were used:

- Gaya: native application installed from Ubuntu Jammy packages.
- Discoverer: Apptainer with a Feel++ SIF image based on Ubuntu Noble.

Note: the Feel++ version is identical, but the dependencies (such as PETSc) are more recent on Noble.

The measured metrics are the execution times of the main components of the simulation:

- Init: load the mesh from the filesystem and initialize the thermoelectric toolbox (finite element context and algebraic data structures).
- Assembly: compute and assemble the matrix and right-hand-side (RHS) entries of the finite element discretization.
- Solve: solve the linear system with a preconditioned GMRES method.
- PostProcess: export the solution and other fields of interest, such as the current density and the electric field, to the filesystem in a visualization format (EnSight Gold).
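Each metric above is the wall-clock time of one phase of the run. The following Python sketch illustrates how such per-phase timings can be collected and reported as absolute times and shares of the total. `PhaseTimer` is a hypothetical helper, not the Feel++ toolbox instrumentation, which is part of the C++ framework. In an MPI run, per-rank times would typically also be reduced across ranks, for example by taking the maximum. That step is an assumption and is not shown here.

```python
import time
from contextlib import contextmanager


class PhaseTimer:
    """Accumulate wall-clock time per named phase (hypothetical helper)."""

    def __init__(self):
        self.elapsed = {}  # phase name -> accumulated seconds, in first-use order

    @contextmanager
    def phase(self, name):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Add this interval to any time already recorded for the same phase.
            self.elapsed[name] = self.elapsed.get(name, 0.0) + time.perf_counter() - start

    def report(self):
        total = sum(self.elapsed.values())
        for name, seconds in self.elapsed.items():
            share = 100.0 * seconds / total if total > 0 else 0.0
            print(f"{name:<12}{seconds:10.3f} s {share:6.1f} %")


timer = PhaseTimer()
for name in ("Init", "Assembly", "Solve", "PostProcess"):
    with timer.phase(name):
        time.sleep(0.01)  # placeholder for the real work of each phase
timer.report()
```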

2.3. Input/Output Dataset Description

2.3.1. Input Data

- Meshes: we generated one mesh, called M1, stored in Gmsh format. Its statistics are given in Table 4.6. For each mesh level, we also prepared a collection of partitioned meshes, stored in an in-house Feel++ mesh format based on JSON and HDF5 files. Both the Gmsh meshes and the partitioned meshes are available in the Feel++ collections of our Girder data management platform.
- Setup: the standard setup of the Feel++ toolboxes, consisting of a cfg file and a JSON file. These configuration files are available in the Feel++ GitHub repository.
- SIF image (Apptainer): feelpp:v0.111.0-preview.10-noble-sif (stored in the Feel++ GitHub registry)
- Ubuntu Jammy package (native): feelpp:v0.111.0-preview.10

| Tag | # points | # edges | # faces | # elements | P1 DoFs (heat) | P2 DoFs (heat) | P1 DoFs (electric) | P2 DoFs (electric) |
|---|---|---|---|---|---|---|---|---|
| M1 | 4.31E+06 | 2.79E+07 | 4.60E+07 | 2.24E+07 | 4.31E+06 | 3.22E+07 | 4.30E+06 | 2.95E+07 |
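The heat-problem columns are consistent with the standard count for scalar Lagrange elements on a conforming tetrahedral mesh. P1 has one degree of freedom per vertex, and P2 adds one per edge, so 4.31E+06 + 2.79E+07 ≈ 3.22E+07. The following sketch checks this arithmetic. The function name is hypothetical, and only orders 1 and 2 are covered. The electric columns are slightly smaller. This would be consistent with the electric problem being posed on only part of the mesh, but the report does not state this.

```python
def lagrange_dofs_tetra(n_vertices: int, n_edges: int, order: int) -> int:
    """Scalar Lagrange DoFs on a conforming tetrahedral mesh (P1 and P2 only)."""
    if order == 1:
        return n_vertices  # one DoF per vertex
    if order == 2:
        return n_vertices + n_edges  # plus one DoF per edge midpoint
    raise ValueError("only P1 and P2 are handled in this sketch")


# M1 statistics from the table (rounded to three significant digits)
points, edges = 4.31e6, 2.79e7
print(f"P1: {lagrange_dofs_tetra(int(points), int(edges), 1):.2e}")
print(f"P2: {lagrange_dofs_tetra(int(points), int(edges), 2):.2e}")
```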

2.4. Results Summary

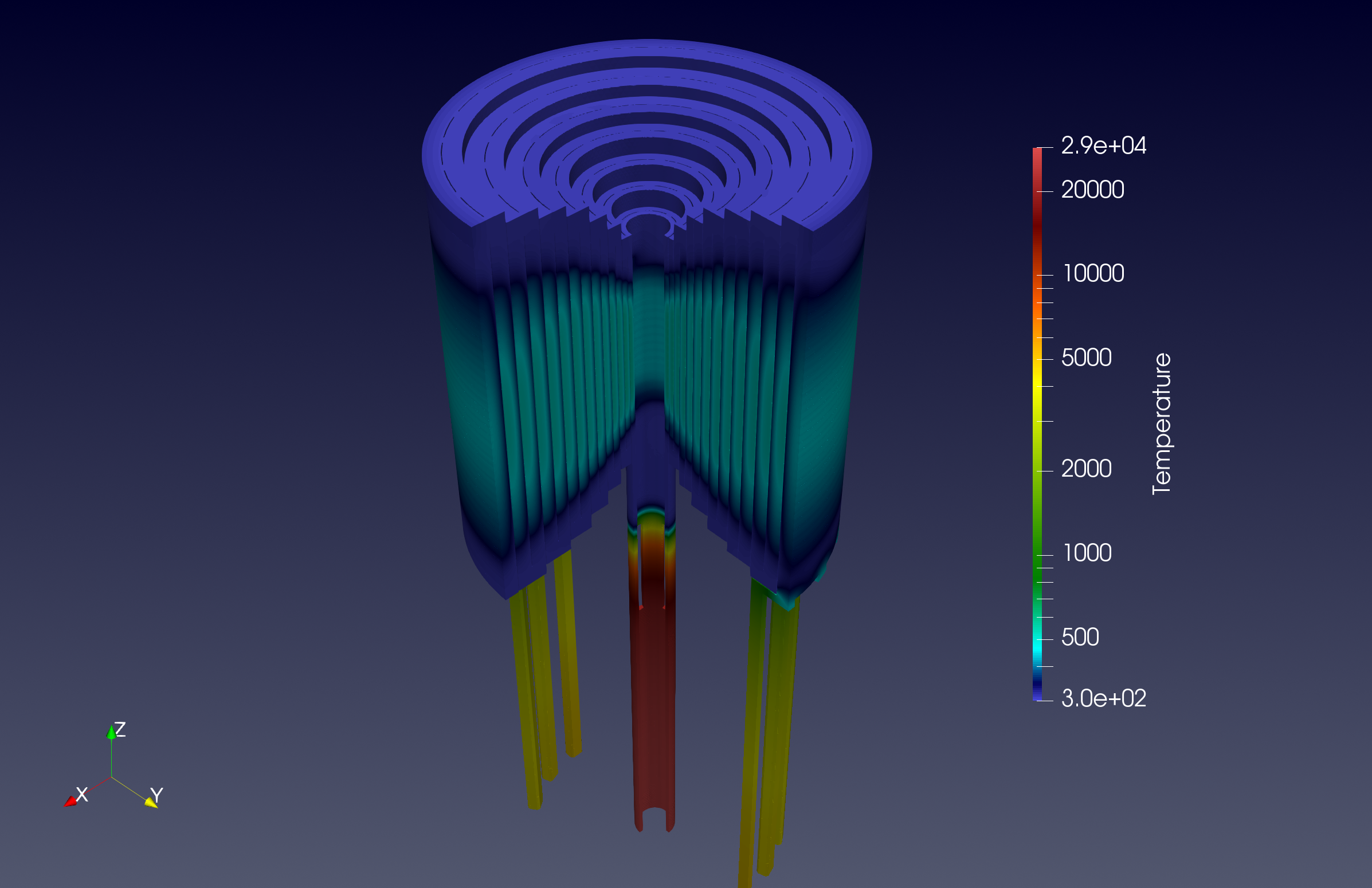

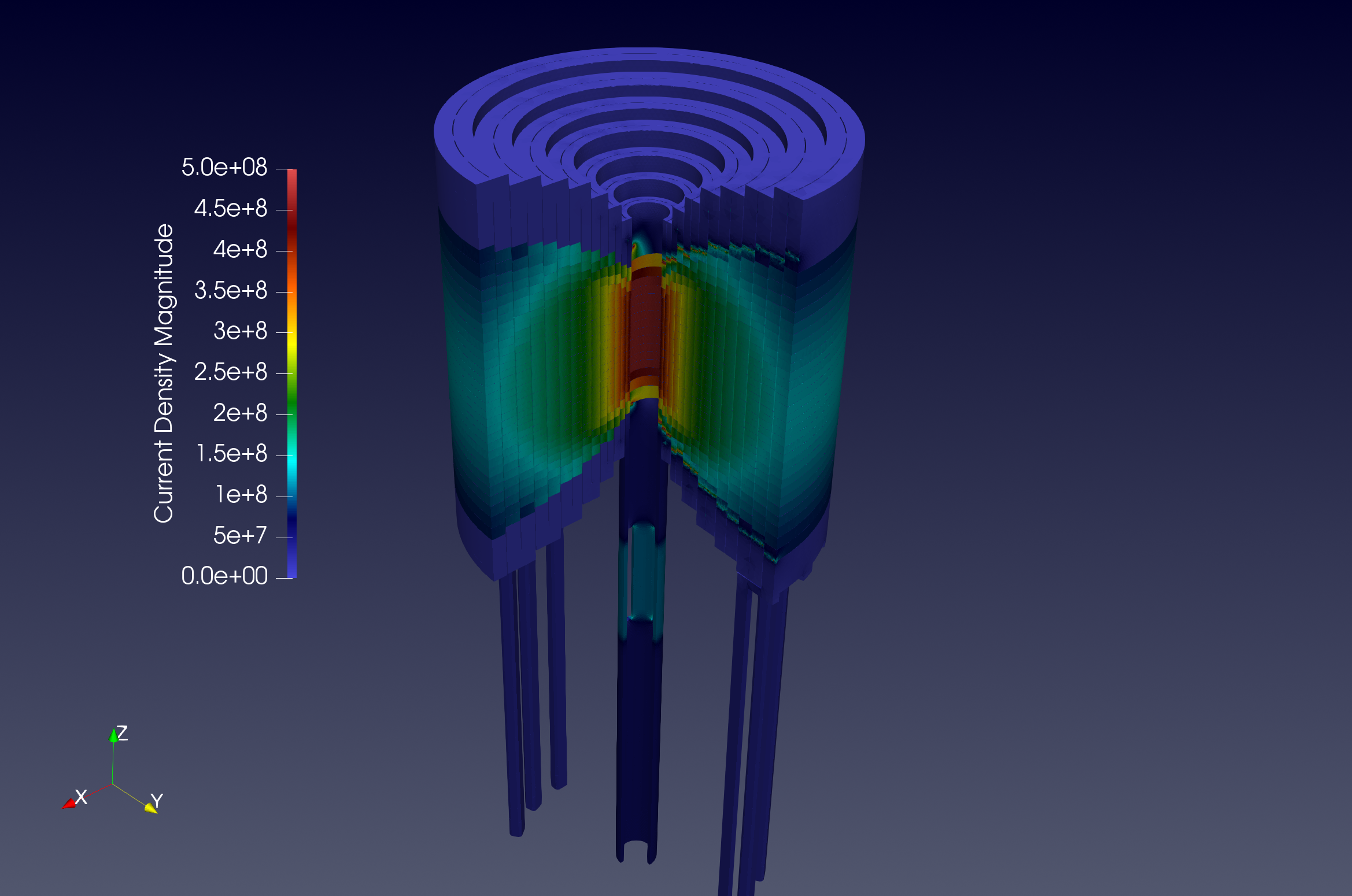

Fig. 4.14 shows examples of 3D visualizations of the fields obtained by solving the thermoelectric problem: the temperature solution and other quantities evaluated during the PostProcess phase, such as the current density, the electric potential, and the electric field.

The performance analysis was carried out on two supercomputers, Gaya and Discoverer. On each of them, we ran the benchmark with P1 and P2 discretizations. Regarding the Feel++ distribution, we used native Ubuntu Jammy packages on Gaya and Apptainer (with an Ubuntu image) on Discoverer. The results on these machines are shown in figs. 4.15 and 4.16, respectively. The reported measurements are the execution times of the simulation components related to WP1, namely Init, Assembly, and PostProcess. For the Solve component, we refer to Section 6.2.3.3.

In every case analyzed, scalability is very good, in the sense that the execution time decreases significantly as the number of tasks increases. The Init phase is the most time-consuming, and its execution time levels off at a value noticeably higher than those of the other phases. This phase includes reading the mesh from disk, which may explain the occasional irregularities observed when many HPC resources are requested.
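"Very good scalability" here refers to strong scaling: the mesh is fixed while the number of tasks grows. A common way to quantify it is the speedup $S(p) = T(p_0)/T(p)$ and the parallel efficiency $E(p) = S(p)\,p_0/p$, both relative to the smallest task count $p_0$. The following sketch computes them for one phase. The function name and the toy timings are hypothetical and are not measurements from this benchmark.

```python
def strong_scaling(times_by_tasks):
    """Return {tasks: (speedup, efficiency)} relative to the smallest task count.

    Speedup S(p) = T(p0) / T(p); parallel efficiency E(p) = S(p) * p0 / p.
    """
    p0 = min(times_by_tasks)
    t0 = times_by_tasks[p0]
    result = {}
    for p in sorted(times_by_tasks):
        speedup = t0 / times_by_tasks[p]
        result[p] = (speedup, speedup * p0 / p)
    return result


# Toy timings (seconds) for a single phase, for illustration only
example = {4: 100.0, 8: 50.0, 16: 25.0, 32: 20.0}
for p, (s, e) in strong_scaling(example).items():
    print(f"{p:>4} tasks: speedup {s:5.2f}, efficiency {e:6.1%}")
```

With these toy values, scaling is ideal up to 16 tasks (efficiency 100%) and drops to 62.5% at 32 tasks. A phase whose time levels off, as described for Init, shows up in the same way.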

In conclusion, we successfully ran this benchmark case and are ready to move on to larger problems and more complex models.